On November 30, 2022, Silicon Valley-based company OpenAI launched its artificial-intelligence-powered chatbot, ChatGPT. Overnight, AI transformed in the popular imagination from a science fiction trope to something anyone with an internet connection could try. ChatGPT was free to use, and it responded to typed prompts naturally enough to seem almost human. After the launch of the chatbot, worldwide Google searches for the term “AI” began a steep climb that still does not seem to have reached its peak.

Physicists were among the earliest developers and adopters of technologies now grouped under the broad umbrella term “AI.” Particle physicists and astrophysicists, with their enormous datasets and their need to analyze them efficiently, are just the sort of people who benefit from the automation AI provides.

So we at Symmetry, an online magazine about particle physics and astrophysics, decided to explore the topic and publish a series on artificial intelligence. We looked at the many forms AI has taken; the ways the technology has helped shape the science (and vice versa); and the ways scientists use AI to advance experimental and theoretical physics, to improve the operation of particle accelerators and telescopes, and to train the next generation of physics students. You can expect to see the result of that exploration here in the coming weeks.

What you should not expect to see is anything written by AI. ChatGPT is an amazing technological accomplishment, but it is not a science writer. A large language model like ChatGPT can summarize information that has been published already, but it cannot update you on anything absent from its training dataset. ChatGPT can fill the gaps in its knowledge with plausible combinations of words, but those words will not necessarily reflect the truth.

Automation, including AI-assisted automation, has been extremely useful to writers. A search engine can help a reporter find background information and sources. A translation tool can help them get the gist of a paper published in another language. A spell-checker can flag words that deserve extra scrutiny. And a text-to-speech reader can help them catch mistakes and graceless phrasing they might have missed when reading silently.

But prompting ChatGPT to write an article means skipping two important processes.

The first process is reporting, which is where the article begins to take shape. Reporting involves taking in new information, including information that has not yet been published and may even contradict what you think you already know. It involves connecting with people, both by building understanding with your sources and by imagining the needs of your audience.

The second process is writing, which is where the article is finally formed. Writing is much more than arranging facts on a page. Writing is thinking. The writing process is where you make connections between your ideas and come up with new ones, where you discover which of your assumptions stand up to the test of expression.

When an article has no human behind these two steps, it shows. AI can produce something that resembles the thoughtful work it was trained on, but AI is not doing any thinking of its own.

The articles in this publication, and the illustrations that accompany them, are the result of deep work, deliberate thought and actual human cooperation. As a staff member said in a meeting about this series, an article that could be written by AI wouldn’t be a Symmetry article.

But if AI can’t be trusted to write a science article, why should it be trusted to do physics?

In that same meeting about the AI series, many of us who make Symmetry discussed that question. The thing is, AI is a tool—or rather, a collection of tools. Tools can be used, and tools can be misused. For people to make good decisions about whether to use a tool, they need to know how the tool works, how well it does the job, and how it affects the world around it.

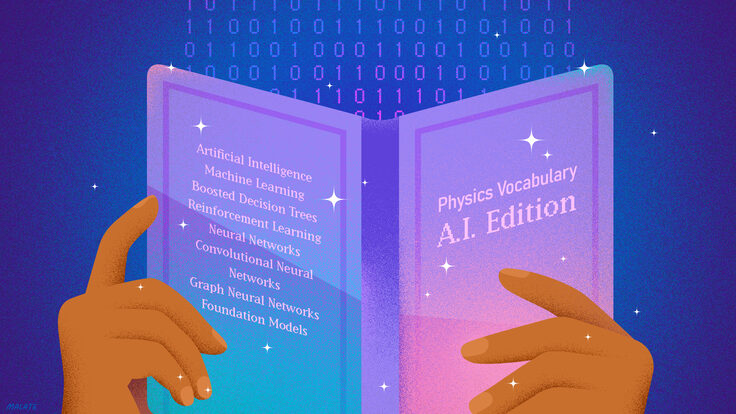

Scientists have been thinking about how to use AI for decades. They are using machine learning to teach algorithms to sort through data and categorize particle collisions or images of the stars. They’re training AI to detect signs that a beam of particles is about to lose focus in an accelerator, and to determine how best to accommodate the many requests for observations at a single telescope. They’re using machine learning as a high-tech calculator to solve problems with more factors than even a supercomputer can handle. Advances in AI are expanding what is possible in particle physics and astrophysics.

And in each of these cases, scientists are still the ones doing the thinking. Experimentalists have to figure out what data to analyze, and once they have their analysis, they must figure out what it means. The operators of the particle accelerator or telescope have to evaluate the suggestions of the AI to determine the right course of action. Theorists have to formulate their calculations and then interpret the results.

In many cases, physicists are automating tasks so specific to their needs that no one has ever automated them before. If they want to use the metaphorical spell-checker or search engine, they must first learn how to build it. When they do, that work can push the boundaries of what’s possible in other fields and in industry as well.

In the end, science is about expanding our knowledge of the universe. This series celebrates the ways that scientists are developing and using AI to help with that. But while you’re reading, please keep in mind that AI is a collection of tools. Even with the best tools, it still takes deep work, deliberate thought and actual human cooperation to create something new.

Editor's note: This editorial expresses the views of the author.