One of the first versions of AI was a computer that played chess. Developed in the 1950s, it could play a full game without the input of a human—except, of course, the moves of its opponent. It took the computer about eight minutes to make each of its own moves, but the computational breakthrough was the beginning of the end of a world without AI. Today, AI tools are taking on a variety of tasks, including helping to operate complex machines in particle physics and astrophysics.

Just as the chess-playing AI required a human opponent, modern AI systems in control rooms must work together with human operators. And just as practicing against an AI might give a human new ideas for ways to play chess, working with an AI in the laboratory might help humans find new ways to operate machines for science.

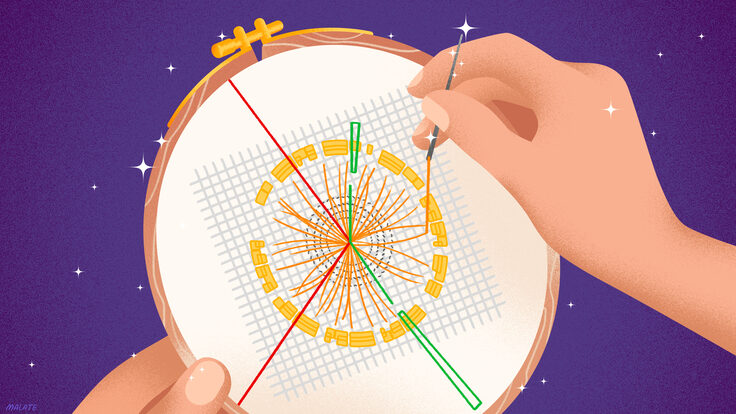

Modeling particles inside accelerators

One commonly used type of AI is machine learning, in which algorithms search out patterns in large datasets without specific instructions on how to do so. At the US Department of Energy’s Fermi National Accelerator Laboratory, physicist Jason St. John writes machine-learning algorithms to help keep particle beams flowing in a particle accelerator.

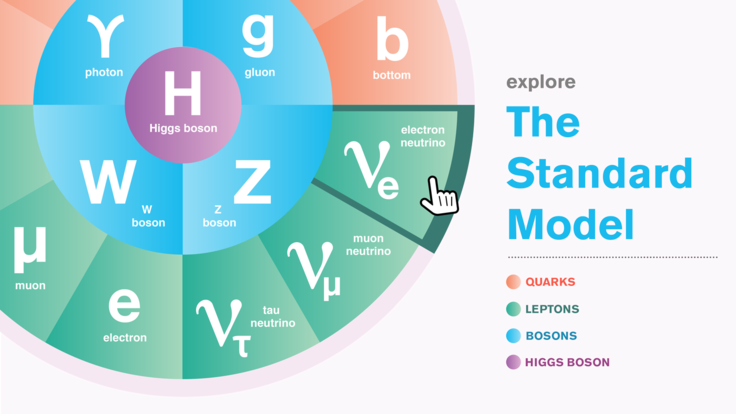

Outside of high-energy physics, the most powerful accelerators are used to drive X-ray lasers, which allow scientists to study chemical, material and biological systems in action. Inside of high-energy physics, the most powerful accelerators collide particles at high energies, which allows scientists to study the fundamental constituents of the universe. Scientists are developing algorithms to help with the operation of both types of accelerators.

Operating a world-leading particle accelerator is its own profession, requiring years of apprenticeship and on-the-job training. At Fermilab, for example, operators are constantly monitoring and tweaking accelerator settings to keep particle beams circulating and focused at record-setting intensities.

In the control room for a particle accelerator, an alarm system indicates when a beam is about to fail. What the alarm system doesn’t look for are the subtle misalignments of the beam or other trends that may occur a fraction of a second before the alarm. If those problems could be detected and, just as importantly, immediately addressed by a trained machine-learning algorithm, St. John says, the beam could keep running and scientists could wring even more science out of each hour of the day.
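As a rough illustration of the kind of early-warning check described here, the sketch below flags a slow drift in a beam-position reading before it reaches a hard alarm limit. It is not St. John's algorithm or any Fermilab code. The function name `find_drift_onset`, the window size, the thresholds and the sample readings are all hypothetical, and a simple rolling-mean comparison stands in for a trained machine-learning model.

```python
from statistics import mean, stdev

def find_drift_onset(readings, baseline_size=20, window=5, n_sigma=3.0):
    """Return the index of the first sample at which the mean of the last
    `window` readings departs from the baseline mean by more than
    `n_sigma` baseline standard deviations, or None if that never happens."""
    baseline = readings[:baseline_size]
    mu = mean(baseline)
    sigma = stdev(baseline)
    for i in range(baseline_size + 1, len(readings) + 1):
        recent = readings[max(0, i - window):i]
        if abs(mean(recent) - mu) > n_sigma * sigma:
            return i - 1
    return None

# Hypothetical data: 20 stable samples followed by a steady drift.
readings = [0.01, -0.01] * 10 + [0.02 * k for k in range(1, 11)]
ALARM_LIMIT = 0.15  # hypothetical hard limit used by a conventional alarm

drift_index = find_drift_onset(readings)
alarm_index = next(i for i, r in enumerate(readings) if abs(r) > ALARM_LIMIT)
print(drift_index, alarm_index)
```

In this toy data the drift check fires several samples before the hard limit is crossed, which is the window in which an automated correction, or an operator, could act.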

To build these types of algorithms, St. John works directly with accelerator operators and machine experts. Operators have the expertise to say what is worthy of automation, and St. John’s team has the expertise to determine if a solution to a problem can be programmed. “Our work is a cooperative effort,” he says. “There will be problems that a machine-learning system doesn’t predict well, so you’ll always need a human there to make decisions, too.”

Telescope scheduling

In astrophysics, scientists rely on powerful telescopes to help reveal the unknowns of the universe. The astrophysics community has a wide range of scientific questions to investigate, but they don’t have the resources to build a whole new telescope to address each one individually, says Fermilab scientist Brian Nord. So, they have to make compromises—like figuring out how to use a single, versatile telescope to address many different questions at once.

The problem is that the observation schedules for the world’s most powerful telescopes are jam-packed, making it hard for astrophysicists to collect the data they need.

Back in 2015, Nord came up with an idea for a new tool to help with this problem: He realized AI could help astrophysicists arrange telescope schedules so that they could pursue multiple very different questions at the same time.

To test this idea, Nord; Peter Melchior, an assistant professor of astrophysical sciences at Princeton University; and Miles Cranmer, an assistant professor in data-intensive science at the University of Cambridge, developed an algorithm that modeled how a telescope could be best used to study a group of 1 billion galaxies. Scientists already had accurate measurements of the galaxies’ positions, but they were missing other key information, like their masses and distances from Earth. Traditionally, gathering this type of information from a billion galaxies takes a long time, sometimes years.

To see if AI could find a way to gather the information more quickly, Nord’s team developed an unsupervised deep-learning model comprising two graph neural networks, or GNNs. GNNs rely on the graph structure (that is, the nodes and edges) of a collection of objects. The team’s GNNs quickly recommended which galaxies to observe first, choosing a non-uniform group to provide a nuanced picture of the universe.
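To make "nodes and edges" concrete, the sketch below builds a small graph from galaxy positions: each galaxy is a node, and an edge links it to each of its nearest neighbors. This only illustrates the kind of data structure a GNN takes as input. It is not the team's model, and the function `build_knn_graph`, the choice of `k`, the flat two-dimensional coordinates and the positions themselves are all hypothetical.

```python
import math

def build_knn_graph(positions, k=2):
    """Map each node index to the indices of its k nearest neighbors,
    using Euclidean distance in a flat plane for simplicity."""
    graph = {}
    for i, p in enumerate(positions):
        others = [j for j in range(len(positions)) if j != i]
        others.sort(key=lambda j: math.dist(p, positions[j]))
        graph[i] = others[:k]
    return graph

# Hypothetical (x, y) positions for five galaxies, in arbitrary units.
positions = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.5), (5.0, 5.0), (5.5, 5.0)]
graph = build_knn_graph(positions, k=2)

# Collapse the neighbor lists into a sorted list of undirected edges.
edges = sorted({tuple(sorted((i, j))) for i, nbrs in graph.items() for j in nbrs})
print(edges)
```

A real GNN would attach features (brightness, color and so on) to each node and learn from how those features relate across edges; here only the graph itself is shown.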

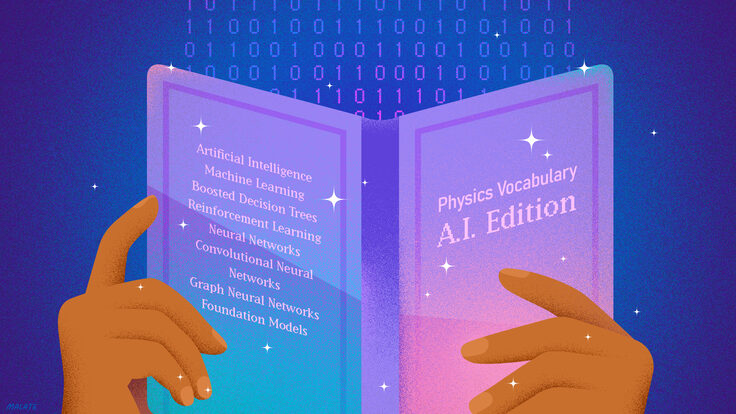

AI for electric grid operators

Developing AI for the operation of complex machines and systems is important outside of particle physics and astrophysics as well. Scientists are also working on AI tools that can help manage the electric grid.

“More energy resources, like solar panels and wind turbines, are connecting to the grid every day,” says scientist Wan-Lin Hu at SLAC National Accelerator Laboratory. “This means that grid operators have more resource options to choose from and sometimes not a lot of time to make those decisions.”

Operators don’t lack the tools to manage electricity flow on the grid, Hu says. In fact, they often have what can feel like too many. A grid operator might open 10 tools to complete a task that must be done within minutes.

Hu and her team at SLAC are working on bridging the communication gap between human operators and AI tools. They want to find ways for algorithms and operators to learn from each other—and then apply these methods to many settings, including the electric grid. They’re not trying to replace human operators; their goal is to develop AI tools that can make decisions easier by presenting operators with a few of the best tool options immediately.

“We might think a fully automated grid system is really cool, but actually we need human decisions along the way for optimum performance,” Hu says. “We want AI tools to learn from the humans and vice versa.”

An example of this is when an algorithm presents two options to an operator. The operator might decide neither option is the right one. If they choose a third option, they can provide that feedback to the AI tool so it will learn from the experience, then give better advice the next time the situation comes up.
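The feedback loop in this example can be sketched in a few lines. The toy class below ranks options by how often operators have picked them, and it accepts a choice that was not among its suggestions. It is an assumption-laden illustration, not the SLAC team's tool: the class `OptionRecommender`, its methods and the option names are hypothetical, and a simple tally stands in for whatever learning method a real system would use.

```python
class OptionRecommender:
    """Toy recommender that ranks options by how often operators chose them."""

    def __init__(self, options):
        # Every known option starts with zero recorded choices.
        self.counts = {option: 0 for option in options}

    def suggest(self, n=2):
        """Return the n most-chosen options; ties keep their original order."""
        ranked = sorted(self.counts, key=lambda option: -self.counts[option])
        return ranked[:n]

    def record_choice(self, chosen):
        """Store the operator's decision, even if it was never suggested."""
        self.counts[chosen] = self.counts.get(chosen, 0) + 1

# Hypothetical option names for a grid-operations task.
tool = OptionRecommender(["reroute_line", "dispatch_battery", "curtail_solar"])
first = tool.suggest()               # the tool's two initial suggestions
tool.record_choice("curtail_solar")  # the operator picks a third option instead
second = tool.suggest()              # the overlooked option now ranks first
print(first, second)
```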

In this type of situation, humans are integral players, and the human-AI relationship grows closer over time. Hu and other researchers can then analyze not only whether humans accept or reject AI-suggested options, but also other factors, such as the amount of time it takes to make a decision and the sequence of human actions, to gather comprehensive feedback about an AI tool.

Serendipity in science

AI is helping scientists in control rooms make decisions based on past experience. But a key part of science is dealing with things that past experience has not taught you to expect. When Galileo pointed his telescope toward Jupiter, he did not know that he would be staring at four “stars” orbiting the planet—stars that he soon realized were actually moons.

A serendipitous moment like this creates the opportunity for an epiphany: Integrating the unexpected into what the scientist already knows can open a new path for science ahead.

But how can you program serendipity into an algorithm?

“I worry about this a lot,” Nord says. “AI tools can be garbage in, garbage out. All a tool is doing in the most basic sense is finding a correlation between an input variable and a labeled variable. If you’re not careful, they’ll do nothing else.”

So, Nord is trying to find ways to leave an AI system open to serendipity.

One possible approach is to build conflict into the AI tool’s search. Scientists often make progress when they take two measurements of the same thing and find that the measurements disagree in significant ways.

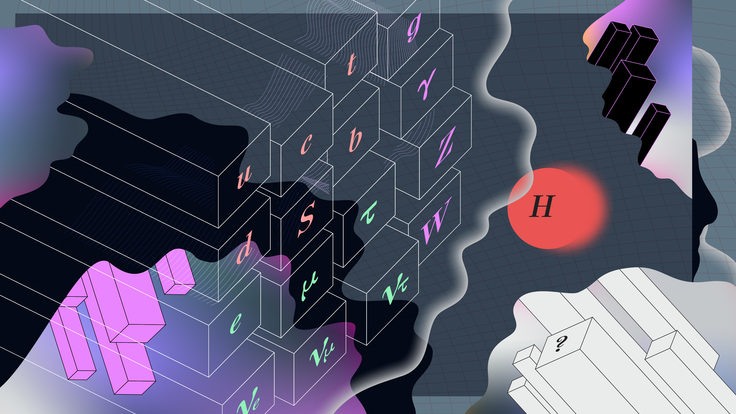

An example of this conflict is the Hubble tension, a disagreement between two separate measurements of the Hubble constant, which describes how fast the universe is expanding and is used to estimate its age. Scientists can measure this single quantity in more than one way: through studies of supernovae and through studies of the cosmic microwave background, radiation that was released after the start of the universe.

Over time, researchers have improved both measurements. But throughout, the results have stubbornly refused to converge on a single answer. This matters to astronomers, in large part because it leaves open the question of whether the universe is expanding more quickly than expected, something that could point to the existence of new particles or forces.
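One common way to put a number on a disagreement like this is to divide the gap between two results by their combined uncertainty. The sketch below does that under the assumption that the two measurements are independent and have Gaussian errors. The function name `tension_sigma` is hypothetical, and the values are placeholders in arbitrary units, not published Hubble constant results.

```python
import math

def tension_sigma(value_a, err_a, value_b, err_b):
    """Gap between two independent measurements, expressed in units of
    their combined standard uncertainty (errors added in quadrature)."""
    return abs(value_a - value_b) / math.hypot(err_a, err_b)

# Placeholder measurements of the same quantity, in arbitrary units.
tension = tension_sigma(10.0, 0.3, 9.0, 0.4)
print(f"{tension:.1f} sigma")
```

The larger this number, the harder it is to explain the gap as a statistical fluctuation, and the more likely it is that one measurement has an unrecognized error or that something new is going on.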

If researchers train AI to solve only one specific scientific problem, they bake in bias for a certain type of science, Nord says. But if researchers could develop AI programs that were rewarded for looking in new spaces that might bring to light conflicting results, like the Hubble tension, that could be a boon to scientific research.

“Conflicting results can be real gems,” Nord says. “This means that we could be on to something, that we have found results that are worth pondering further.”