When physicists talk about machine learning, it’s not uncommon to hear them refer to old techniques used “back in 2010.”

That’s not because the field is new. The notion of training an algorithm to take data and use it to make decisions on its own—the definition of machine learning—has been around for at least 70 years.

But with the explosion of big data and computing resources, as well as the development of new techniques within the past decade, machine learning moved out of the theoretical halls of academia and into resource-rich industry.

In the early 2010s, tech companies saw the potential for profits in machine learning, and the balance of innovation shifted. Now, many machine-learning advances, including ways to scale up the technique to handle huge amounts of data, come from industry, with big tech companies betting big on the field as they experiment with and explore new technologies.

Since the field’s early days, physicists have also played an important role in the development of machine learning, whether by contributing theories that others have used to design machine-learning models or by developing leading-edge techniques of their own.

“Money and capitalism allow industry to move faster,” says Thea Aarrestad, a particle physicist at ETH Zurich. “But the groundwork came from us in pure research.”

Today, physicists work in a triangle of sorts—connected to both industry and computer science academics—to use these approaches to advance high-energy physics and to develop new techniques that can be used across domains.

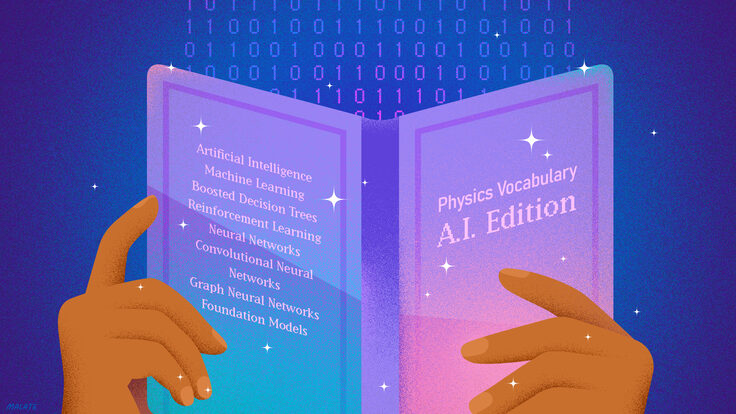

Algorithms inspired by physics

During the long dawn of machine learning—from the 1950s to the early 2000s—“academia propped up fundamental research that was often exploratory,” says Petar Veličković, a staff research scientist at Google DeepMind. “Some of the most exciting research directions that led to the scalable development of AI as we know it right now came from academia.”

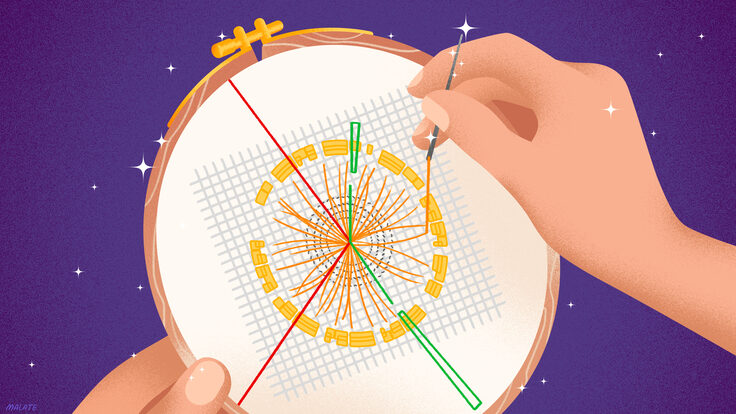

Physics and machine learning in academia have long been intertwined. In the 1960s, physicist Paul Hough developed the Hough transform, a pattern-recognition algorithm, to scan photographs of particle detectors called bubble chambers for particle tracks. Later, computer scientists used the Hough transform in machine-learning applications like computer vision, which enables computers to identify and understand objects in images.
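
To give a flavor of how this kind of pattern recognition works, here is a minimal sketch of a Hough-style line finder in Python. It uses the angle-and-distance description of a line that is common in computer vision today, which is an assumption made for illustration and not necessarily Hough’s original formulation. The function names `hough_votes` and `strongest_line` and the toy hit coordinates are hypothetical.

```python
import math
from collections import Counter

def hough_votes(points, n_theta=180, rho_step=1.0):
    """Let every point vote for each discretized line that passes through it.

    A line is described by theta (the direction of its normal) and rho (its
    signed distance from the origin): rho = x*cos(theta) + y*sin(theta).
    """
    votes = Counter()
    for x, y in points:
        for i in range(n_theta):
            theta = math.pi * i / n_theta
            rho = x * math.cos(theta) + y * math.sin(theta)
            votes[(i, round(rho / rho_step))] += 1
    return votes

def strongest_line(points, n_theta=180, rho_step=1.0):
    """Return (theta in degrees, rho, vote count) for the most-voted line."""
    votes = hough_votes(points, n_theta, rho_step)
    (theta_idx, rho_idx), count = votes.most_common(1)[0]
    return 180.0 * theta_idx / n_theta, rho_idx * rho_step, count

# Toy "track": ten hits along the vertical line x = 5, plus two stray hits.
hits = [(5, y) for y in range(0, 100, 10)] + [(12, 37), (40, 80)]
theta_deg, rho, count = strongest_line(hits)
print(theta_deg, rho, count)
```

Points that lie on a common line pile their votes into the same bin, so the track stands out as a peak even when stray hits are present. In this toy case the peak sits at an angle of 0 degrees and a distance of 5, with ten votes.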

In the 1980s, statistical physics researchers were working with spin glass, a solid material with interesting magnetic properties. They realized that the material’s disorderly magnetization could be a physical manifestation of mathematical rules used in machine learning, shedding light on how machines learn.

Many machine-learning techniques are inspired by ideas and methods in physics. Diffusion models, which are used in machine learning to both generate images and remove noise from them, are directly inspired by non-equilibrium thermodynamics. Researchers add noise to an image and then reverse the process to restore its structure, which creates a new model of the data—an idea used in non-equilibrium statistical physics.
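
The add-noise-then-reverse idea can be sketched in a few lines of Python with NumPy. This follows one widely used formulation of diffusion models, chosen here as an assumption: the noise schedule, the step count and the function names `make_alpha_bar`, `add_noise` and `remove_noise` are all illustrative. The sketch also cheats by handing the reverse step the exact noise that was added; in a real diffusion model, a trained neural network has to estimate that noise.

```python
import numpy as np

def make_alpha_bar(n_steps=1000, beta_start=1e-4, beta_end=0.02):
    """Fraction of the original signal that survives after each noising step."""
    betas = np.linspace(beta_start, beta_end, n_steps)
    return np.cumprod(1.0 - betas)

def add_noise(x0, t, alpha_bar, rng):
    """Forward process: blend the clean image with Gaussian noise at step t."""
    eps = rng.standard_normal(x0.shape)
    xt = np.sqrt(alpha_bar[t]) * x0 + np.sqrt(1.0 - alpha_bar[t]) * eps
    return xt, eps

def remove_noise(xt, t, alpha_bar, eps_estimate):
    """Reverse step: undo the blend, given an estimate of the added noise."""
    return (xt - np.sqrt(1.0 - alpha_bar[t]) * eps_estimate) / np.sqrt(alpha_bar[t])

rng = np.random.default_rng(0)
image = rng.random((8, 8))            # stand-in for a small grayscale image
alpha_bar = make_alpha_bar()
noisy, true_noise = add_noise(image, 500, alpha_bar, rng)
# Assumption: we pass in the true noise; a trained model would predict it.
restored = remove_noise(noisy, 500, alpha_bar, true_noise)
```

With a perfect noise estimate, `restored` matches `image` up to rounding error. The quality of a real model depends on how well its network learns to make that estimate.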

More recently, computer scientist Adji Bousso Dieng, a professor at Princeton University and research scientist at Google AI who works at the intersection of artificial intelligence and science, was inspired by physics in her own work. She developed a technique called the Vendi Score to measure the diversity of a dataset—how different the data points are within a set, an important criterion for making a useful machine-learning system. To create the measurement, she combined definitions of diversity from the field of ecology with equations used in quantum mechanics.
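
As a rough illustration, the Vendi Score is commonly stated as the exponential of the entropy of the eigenvalues of a similarity matrix divided by the number of data points, the same kind of entropy that quantum mechanics uses for density matrices. The Python sketch below assumes that definition; the function name `vendi_score`, the cutoff for near-zero eigenvalues and the two toy similarity matrices are hypothetical.

```python
import numpy as np

def vendi_score(similarity):
    """Exponential of the entropy of the eigenvalues of K / n.

    `similarity` is assumed to be a symmetric, positive semidefinite matrix
    whose diagonal entries equal 1 (each item is fully similar to itself).
    """
    k = np.asarray(similarity, dtype=float)
    n = k.shape[0]
    eigvals = np.linalg.eigvalsh(k / n)
    eigvals = eigvals[eigvals > 1e-12]   # treat 0 * log(0) as 0
    entropy = -np.sum(eigvals * np.log(eigvals))
    return float(np.exp(entropy))

identical = np.ones((4, 4))   # four copies of the same item
distinct = np.eye(4)          # four items with nothing in common

score_identical = vendi_score(identical)
score_distinct = vendi_score(distinct)
```

Under these assumptions, four identical items score about 1 and four completely dissimilar items score about 4, so the number can be read as an effective count of distinct items in the set.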

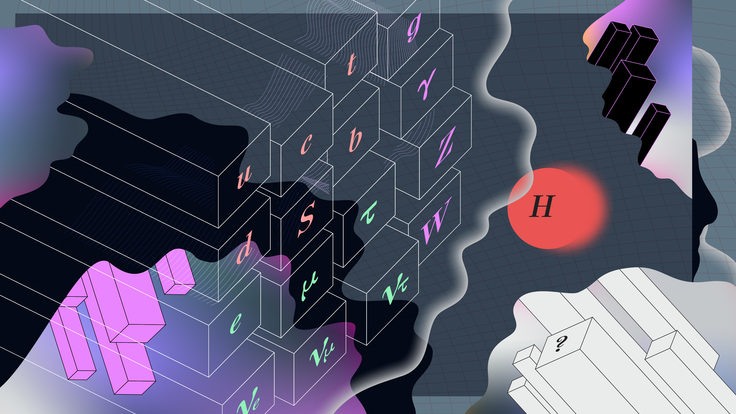

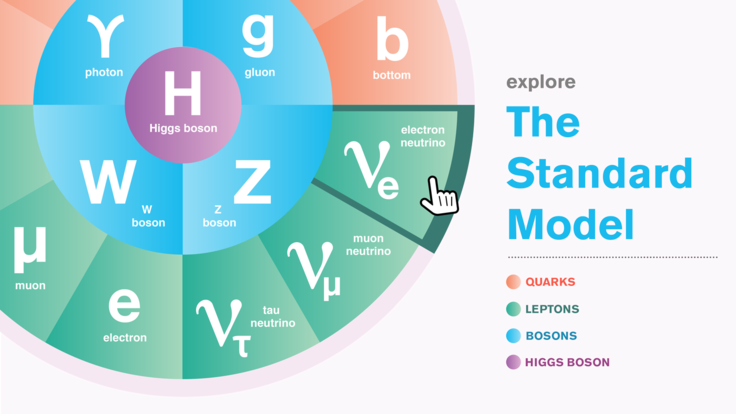

Perhaps the most important contribution particle physics has made to machine learning is the idea of symmetry, a principle that plays a role in everything from the Standard Model to quantum mechanics. Today, people are trying to develop machine-learning techniques that can find symmetry in data. By determining all the ways in which data can be arranged without changing its underlying properties, researchers can build more robust machine-learning models.
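
One simple symmetry is the order in which the particles in a collision event are listed, which should not affect what a model concludes about the event. The Python sketch below shows one way to build that in by design: apply the same transformation to every particle and then add up the results. It is an illustration under assumed names (`embed`, `event_summary`) with random toy data, not a technique attributed to any of the researchers quoted here.

```python
import numpy as np

def embed(particles, weights):
    """Apply the same transformation to every particle (one row each)."""
    return np.tanh(particles @ weights)

def event_summary(particles, weights):
    """Sum over particles, so the result ignores the order of the rows."""
    return embed(particles, weights).sum(axis=0)

rng = np.random.default_rng(1)
event = rng.normal(size=(5, 3))        # 5 toy particles, 3 features each
weights = rng.normal(size=(3, 4))      # stand-in for learned parameters
shuffled = event[rng.permutation(5)]   # same particles, different order

same_summary = np.allclose(
    event_summary(event, weights), event_summary(shuffled, weights)
)
```

Because the sum does not care about ordering, `same_summary` is true for any shuffle. A model built on such a summary never has to learn that symmetry from data.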

“I would argue that physics has been quite instrumental in how we understand and build machine-learning systems nowadays, as well,” Veličković says. “There’s a lot of correspondence between the principles we use to build deep-learning architectures and principles we use to build basic models in physics.”

Collaborations with computer science

As computing power and scalable machine-learning techniques emerged after 2010, scientists and engineers in industry began to dominate the field, with big tech companies leading the way on innovations. “In general, industrial positions tend to provide more computational resources, as well as access to interesting data and problems that may not be easy to acquire within academia,” Veličković says.

Today, physicists work with both academic computer scientists and computer scientists in industry to use machine learning to advance high-energy physics. It’s a collaboration that most say is frictionless. After all, many of those in industry spent time in academia to earn their PhDs, so collaborations feel natural.

Computer scientist Gilles Louppe, a professor at the University of Liège, has worked with particle physicists on machine-learning problems at European research center CERN, home of the Large Hadron Collider. At CERN, Louppe helped physicists by showing them how neural networks could explain their experimental data better than the algorithms they previously used. “The goal is to start from the end—the particles or the final observation—and use machine learning to rewind that and recover the model parameters,” he says.

Dieng, too, works with scientists across domains, including by developing molecular simulation techniques and creating machine-learning models that predict the properties of crystals from text descriptions of them. She enjoys working with scientists, learning about their fields, and incorporating their theories and domain knowledge to help them make predictions and discoveries. And as someone who was in graduate school just as the field of machine learning was exploding, she believes the best is yet to come for AI in science.

Still, both Louppe and Dieng find themselves tempering expectations of what machine-learning techniques can do for physics research.

“Physicists sometimes have the impression that a neural network will discover something new, but it can only work with the data you have and the assumptions that were made when designing the network,” Louppe says.

For example, scientists at CERN would like to find anomalies among the many particle detection events that they measure, Louppe says. “But this requires defining normality with high precision, ranging from frequent events to rare but expected events. And if you can’t say that, then a neural network won’t have the necessary referential to detect an anomaly.”

An interface with the AI industry

For the first time in history, big tech companies have made huge investments in artificial intelligence and machine learning. Google DeepMind’s AlphaFold system, for example, predicts protein structures from their amino acid sequences (a complex problem involving several forces and mechanisms) and could ultimately play a big role in understanding and treating disease. It has predicted more than 214 million structures, and the database has been used in malaria vaccine research and cancer drug discovery.

In high-energy physics, the relationship can be symbiotic: Industry gives researchers access to the best computing resources, while researchers give industry huge amounts of clean data for testing new AI systems. The LHC, for example, produces 500 terabytes of data per second. That data set has led to fruitful collaborations.

But particle physics has more to offer industry, beyond valuable data.

Phil Harris, a physics professor at MIT who developed machine-learning techniques that helped lead to the discovery of the Higgs boson at the LHC, was part of a team that worked with Microsoft to prototype LHC data analysis problems on the company’s Azure Machine Learning system. He has also received grants from Google Cloud and Amazon Web Services to use those companies’ computing resources to study LHC data.

“In my experience, working with industry has generally been positive,” he says. “They want to work with us, and it’s very collaborative. And it gives us access to computing resources that we don’t normally have.”

To sort through particle collisions at the LHC, physicists have developed real-time processing techniques that pick out the collisions worth studying. The process is extremely fast: algorithms decide within millionths of a second whether to keep a particle detection event.

When Harris and his colleagues were developing techniques to do this in 2016, they realized that no ultra-fast machine-learning algorithms were available. “We just happened to stumble onto a problem that had not been solved by industry, so we sat down to see just how fast we could make them,” Harris says.

Training algorithms to run on field-programmable gate arrays—semiconductor devices that can process a high volume of data with minimal delay—made the algorithms run more quickly, allowing for more events to be preserved and studied. “Then we realized we could turn what we designed into a software library and directly connect it with industry,” he says.

The result is hls4ml, a software package that provides fast translation of machine-learning models to code that can be run on field-programmable gate arrays.

“That drew the attention of the autonomous vehicle industry,” says ETH Zurich’s Aarrestad, who uses machine learning to improve data collection and analysis at the LHC.

Self-driving cars must make quick decisions in real time using the same sort of field-programmable gate arrays. Aarrestad and Harris worked with autonomous vehicle company Zenseact to show that their techniques could also reduce how much power the field-programmable gate arrays use.

In fact, it was not the speed of the techniques that drew the eye of industry—it was the low power consumption. “Let’s say you want a pacemaker or a wearable medical device that could do signal processing on the fly,” Harris says. “Our fast machine-learning techniques allow for that and use less energy.”

Aarrestad is also working with Google to create power-efficient machine-learning algorithms that scientists can use inside LHC detectors, which are cooled to low temperatures. If computers are running algorithms inside those detectors, researchers don’t want them consuming high amounts of energy and heating up the system.

“We have a specific problem we want to solve, and we provide Google with a use case to test new methods,” Aarrestad says. “We are all researchers, and we all want to learn more in our fields. It feels very pure. We do it out of joy.”

The fast machine learning team decided to make their techniques open-source rather than try to commercialize their findings (and become part of industry themselves) because “we’re all committed to physics, and we saw this as a valuable scientific tool,” Harris says. “We didn’t want to be beholden to some industry to make a lot of money.”

A focus on profits, with room for exploration

It’s true that when industry becomes interested in a scientific domain, collaboration can be stifled, since knowledge can be directly linked to profits. The explosion in popularity of large language models like ChatGPT, for example, has led some industry scientists and engineers to hold their cards closer to their chests, physicists and computer scientists say. Indeed, those in physics who collaborate with industry say they are often kept in the dark about the company’s ultimate goals with a project.

“Before, you could be in industry and produce research that would be presented at the top machine-learning conferences,” Dieng says. “There’s less freedom in industry to do that now.”

Industry will maintain a significant role in AI research, “because they know that it will eventually bring in a lot of money,” Louppe says. But, he says, industry also often chases the hottest trend, like the current focus on large language models. That leaves plenty of room for academia to innovate and collaborate on other problems. “Academia will remain in the game, because this is still a very wide-open field,” he says. “We have too many questions still to answer.”

And in physics, graduate students are increasingly trained specifically in machine learning, because many physicists believe the real insights of the future will come from these trained algorithms.

“Almost every graduate student’s thesis has some aspect of machine learning to it,” Aarrestad says. “Every physicist needs to know how it works, because it’s going to be a huge component of how we do research in the future. It’s going to help us find phenomena that we haven’t thought of yet.”