The Worldwide LHC Computing Grid is gearing up to handle the massive amount of data expected shortly after the restart of the Large Hadron Collider.

Read how CERN is preparing to take record-setting amounts of data in this Interactions press release.

And read in the press release below how Brookhaven National Laboratory and Fermi National Accelerator Laboratory will lead the US portion of the data distribution.

Tape storage at Brookhaven National Laboratory's Tier-1 Computing Center. Photo Courtesy of BNL.

BATAVIA, IL and UPTON, NY--The world's largest computing grid has passed its most comprehensive tests to date in anticipation of the restart of the world's most powerful particle accelerator, the Large Hadron Collider (LHC). The successful dress rehearsal proves that the Worldwide LHC Computing Grid (WLCG) is ready to analyze and manage real data from the massive machine. The United States is a vital partner in the development and operation of the WLCG, with 15 universities and three US Department of Energy (DOE) national laboratories from 11 states contributing to the project.

The full-scale test, collectively called the Scale Test of the Experimental Program 2009 (STEP09), demonstrates the ability of the WLCG to efficiently navigate data collected from the LHC's intense collisions at CERN, in Geneva, Switzerland, all the way through a multi-layered management process that culminates at laboratories and universities around the world. When the LHC resumes operations this fall, the WLCG will handle more than 15 million gigabytes of data every year.
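To put the quoted annual volume in more familiar terms, the short sketch below converts 15 million gigabytes per year into petabytes and an average sustained rate. It assumes decimal units (1 GB = 10^9 bytes), a 365-day year, and data spread evenly across the year; none of these assumptions come from the press release, and real LHC data taking is far burstier than an annual average suggests.

```python
# Back-of-the-envelope conversion of the quoted annual data volume.
# Assumptions (not from the press release): decimal units (1 GB = 10**9 bytes),
# a 365-day year, and data spread evenly over the year.

GIGABYTE = 10**9            # bytes, decimal convention (assumed)
PETABYTE = 10**15           # bytes, decimal convention (assumed)
SECONDS_PER_YEAR = 365 * 24 * 60 * 60

annual_gigabytes = 15_000_000   # "more than 15 million gigabytes" per year

annual_bytes = annual_gigabytes * GIGABYTE
annual_petabytes = annual_bytes / PETABYTE
average_bytes_per_second = annual_bytes / SECONDS_PER_YEAR
average_gigabits_per_second = average_bytes_per_second * 8 / 10**9

print(f"{annual_petabytes:.0f} PB per year")
print(f"{average_bytes_per_second / 10**6:.0f} MB/s on average")
print(f"{average_gigabits_per_second:.1f} Gbit/s on average")
```

Under these assumptions the figure works out to roughly 15 petabytes per year, or a little under half a gigabyte per second sustained around the clock. Because the release says "more than" 15 million gigabytes, these numbers are best read as a lower bound.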

Although there have been several large-scale WLCG data-processing tests in the past, STEP09, which was completed on June 15, was the first to simultaneously test all of the key elements of the process.

"Unlike previous challenges, which were dedicated testing periods, STEP09 was a production activity that closely matches the types of workload that we can expect during LHC data taking. It was a demonstration not only of the readiness of experiments, sites and services but also the operations and support procedures and infrastructures," said CERN's Ian Bird, leader of the WLCG project.

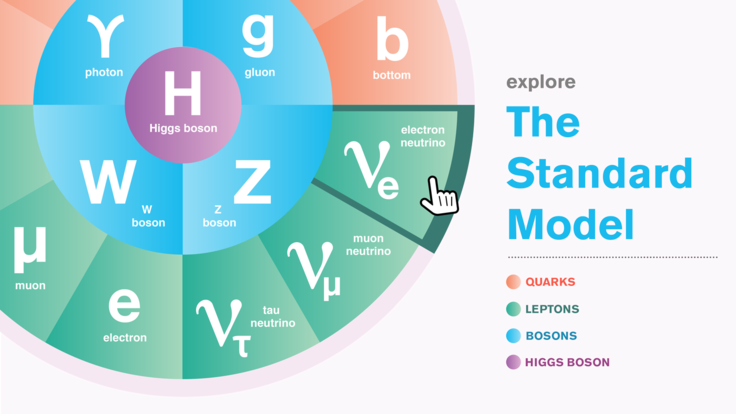

Once LHC data have been collected at CERN, dedicated optical fiber networks distribute the data to 11 major "Tier-1" computer centers in Europe, North America and Asia, including those at DOE's Brookhaven National Laboratory in New York and Fermi National Accelerator Laboratory in Illinois. From these, data are dispatched to more than 140 "Tier-2" centers around the world, including 12 in the United States. Physicists will analyze data from the LHC experiments--ATLAS, CMS, ALICE, and LHCb--at the Tier-2 and Tier-3 centers, leading to new discoveries. Support for the Tier-2 and Tier-3 centers is provided by the DOE Office of Science and the National Science Foundation.

"In order to really prove our readiness at close-to-real-life circumstances, we have to carry out data replication, data reprocessing, data analysis, and event simulation all at the same time and all at the expected scale for data taking," said Michael Ernst, director of Brookhaven National Laboratory's Tier-1 Computing Center. "That's what made STEP09 unique."

The result was "wildly successful," Ernst said, adding that the US distributed computing facility for the ATLAS experiment completed 150,000 analysis jobs at an efficiency of 94 percent.

A key goal of the test was gauging the analysis capabilities of the Tier-2 and Tier-3 computing centers. During STEP09's 13-day run, seven US Tier-2 centers and four US Tier-3 centers for the CMS experiment performed around 225,000 successful analysis jobs.
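For a sense of scale, the quoted US CMS figures can be turned into a rough daily rate. The sketch below assumes the jobs were spread evenly over the 13 days and across the eleven centers, which is an illustrative simplification and not something the press release states.

```python
# Rough throughput implied by the quoted STEP09 figures for US CMS.
# Assumption (not from the press release): jobs were spread evenly over
# the run and across the centers, which real workloads rarely are.

successful_jobs = 225_000      # "around 225,000 successful analysis jobs"
run_days = 13                  # STEP09's 13-day run
centers = 7 + 4                # seven Tier-2 plus four Tier-3 centers

jobs_per_day = successful_jobs / run_days
jobs_per_center_per_day = jobs_per_day / centers

print(f"about {jobs_per_day:,.0f} jobs per day")
print(f"about {jobs_per_center_per_day:,.0f} jobs per center per day")
```

Since the job count itself is approximate, the results are only indicative: on the order of 17,000 successful jobs per day in total, or around 1,600 per center per day.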

"We knew from past tests that we wanted to improve certain areas," said Oliver Gutsche, the Fermilab physicist who led the effort for the CMS experiment. "This test was especially useful because we learned how the infrastructure behaves under heavy load from all four LHC experiments. We now know that we are ready for collisions."

US contributions to the WLCG are coordinated through the Open Science Grid (OSG), a national computing infrastructure for science. OSG contributes computing power not only for LHC data needs but also for projects in many other scientific fields, including biology, nanotechnology, medicine and climate science.

"This is another significant step to demonstrating that shared infrastructures can be used by multiple high-throughput science communities simultaneously," said Ruth Pordes, executive director of the Open Science Grid Consortium. "ATLAS and CMS are not only proving the usability of OSG, but contributing to maturing national distributed facilities in the US for other sciences."

Physicists in the United States and around the world will sift through the LHC data in search of tiny signals that will lead to discoveries about the nature of the physical universe. Through their distributed computing infrastructures, these physicists also help other scientific researchers increase their use of computing and storage for broader discovery.

Read the full press release here.