In 2010, Mike Williams traveled from London to Amsterdam for a physics workshop. Everyone there was abuzz with the possibilities—and possible drawbacks—of machine learning, which Williams had recently proposed incorporating into the LHCb experiment. Williams, now a professor of physics and leader of an experimental group at the Massachusetts Institute of Technology, left the workshop motivated to make it work.

LHCb is one of the four main experiments at the Large Hadron Collider at CERN. Every second, inside the detectors for each of those experiments, proton beams cross 40 million times, generating hundreds of millions of proton collisions, each of which produces an array of particles flying off in different directions. Williams wanted to use machine learning to improve LHCb’s trigger system, a set of decision-making algorithms programmed to recognize and save only collisions that display interesting signals—and discard the rest.

Of the 40 million crossings, or events, that happen each second in the ATLAS and CMS detectors—the two largest particle detectors at the LHC—data from only a few thousand are saved, says Tae Min Hong, an associate professor of physics and astronomy at the University of Pittsburgh and a member of the ATLAS collaboration. “Our job in the trigger system is to never throw away anything that could be important,” he says.

So why not just save everything? The problem is that it’s much more data than physicists could ever—or would ever want to—store.

Williams’ work after the conference in Amsterdam changed the way the LHCb detector collected data, a shift that has occurred in all the experiments at the LHC. Scientists at the LHC will need to continue this evolution as the particle accelerator is upgraded to collect more data than even the improved trigger systems can possibly handle. When the LHC moves into its new high-luminosity phase, it will reach up to 8 billion collisions per second.

“As the environment gets more difficult to deal with, having more powerful trigger algorithms will help us make sure we find things we really want to see,” says Michael Kagan, lead staff scientist at the US Department of Energy’s SLAC National Accelerator Laboratory, “and maybe help us look for things we didn’t even know we were looking for.”

Going beyond the training sets

Hong says that, at its simplest, a trigger works like a motion-sensitive light: It stays off until activated by a preprogrammed signal. For a light, that signal could be a person moving through a room or an animal approaching a garden. For triggers, the signal is often an energy threshold or a specific particle or set of particles. If a collision, also called an event, contains that signal, the trigger is activated to save it.
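As a rough illustration, the motion-sensor picture fits in a few lines of Python. Everything specific here is invented for the sketch: the `Event` fields, the 100 GeV threshold and the choice of muons as the wanted particle do not come from any experiment's real trigger settings.

```python
from dataclasses import dataclass


@dataclass
class Event:
    """Toy collision event; the fields are illustrative, not a real detector format."""
    total_energy_gev: float
    particles: tuple


def simple_trigger(event, energy_threshold_gev=100.0, wanted=frozenset({"muon"})):
    """Fire if the event passes the energy threshold or contains a wanted particle."""
    has_energy = event.total_energy_gev >= energy_threshold_gev
    has_particle = any(p in wanted for p in event.particles)
    return has_energy or has_particle


events = [
    Event(250.0, ("electron",)),     # passes on energy
    Event(12.0, ("pion", "pion")),   # nothing of interest, discarded
    Event(30.0, ("muon", "pion")),   # passes on particle content
]
saved = [e for e in events if simple_trigger(e)]
```

Only the first and third toy events would be kept; the second never activates the trigger.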

In 2010, Williams wanted to add machine learning to the LHCb trigger in the hopes of expanding the detector’s definitions of interesting particle events. But machine-learning algorithms can be unpredictable. They are trained on limited datasets and don’t have a human’s ability to extrapolate beyond them. As a result, when faced with information unlike anything in their training data, they can make erratic decisions.

That unpredictability made many trigger experts wary, Williams says. “We don’t want the algorithm to say, ‘That looks like [an undiscovered particle like] a dark photon, but its lifetime is too long, so I’m going to ignore it,’” Williams says. “That would be a disaster.”

Still, Williams was convinced it could work. On the hour-long plane ride home from that conference in Amsterdam, he wrote out a way to give an algorithm set rules to follow—for example, that a long lifetime is always interesting. Without that particular fix, an algorithm might only follow that rule up to the longest lifetime it had previously seen. But with this tweak, it would know to keep any longer-lived particle, even if its lifetime exceeded any of those in its training set.
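The idea can be sketched with a toy model. This is not Williams’ actual algorithm, which the article does not spell out. It assumes a score learned in lifetime bins, with hypothetical bin edges and scores, and compares a naive lookup with a version forced never to decrease as lifetime grows.

```python
import bisect

# Hypothetical training result: bin edges in picoseconds and one score per bin.
# The dip from 0.20 to 0.15 stands in for statistical noise in the training set.
BIN_EDGES_PS = [0.0, 0.5, 1.0, 2.0, 4.0]
LEARNED_SCORES = [0.05, 0.20, 0.15, 0.70]


def naive_score(lifetime_ps):
    """Score from the training bins only; anything outside them is unknown (0.0)."""
    if not (BIN_EDGES_PS[0] <= lifetime_ps < BIN_EDGES_PS[-1]):
        return 0.0
    i = bisect.bisect_right(BIN_EDGES_PS, lifetime_ps) - 1
    return LEARNED_SCORES[i]


def monotonic_score(lifetime_ps):
    """Same bins, but the score never decreases as the lifetime grows."""
    if lifetime_ps < BIN_EDGES_PS[0]:
        return 0.0
    # A running maximum removes dips between neighboring bins.
    running_max, best = [], 0.0
    for s in LEARNED_SCORES:
        best = max(best, s)
        running_max.append(best)
    # Lifetimes past the last bin inherit the last bin's score.
    i = bisect.bisect_right(BIN_EDGES_PS, lifetime_ps) - 1
    return running_max[min(i, len(running_max) - 1)]


long_lived = 10.0  # longer than anything in the toy training range
naive_result = naive_score(long_lived)
monotonic_result = monotonic_score(long_lived)
```

With a keep-threshold of, say, 0.5, the naive lookup would discard the 10-picosecond candidate, while the monotonic version would keep it.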

Williams spent the next few months developing software that could implement his algorithm. When he flew to the United States for Christmas, he used the software to train his new algorithm on simulated LHC data. It was a success. “It was an absolute work of art,” says Vava Gligorov, a research scientist at the National Centre for Scientific Research in France, who worked on the system with Williams.

Updated versions of the algorithm have been running LHCb’s main trigger ever since.

Getting a bigger picture

Physicists use trigger systems to store data from the types of particle collisions that they know are likely to be interesting. For example, scientists store collisions that produce two Higgs bosons at the same time, called di-Higgs events. Studying such events could enable physicists to map out the potential energy of the associated Higgs field, which could provide hints about the eventual fate of our universe.

Higgses are most often signaled by the appearance of two b quarks. If a proton collision produces a di-Higgs, four b quarks should appear in the detector. A trigger algorithm, then, could be programmed to capture data only if it finds four b quarks at once.

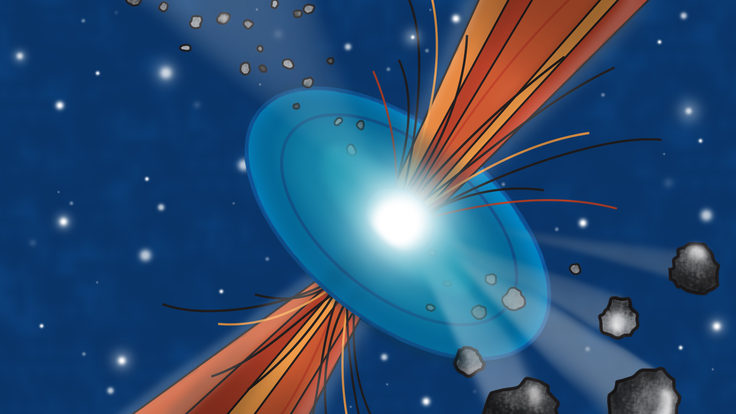

But spotting those four quarks is not as simple as it sounds. The two Higgs bosons interact as they move through space, like two water balloons thrown at one another through the air. Just as the droplets of water from colliding balloons continue to move after the balloons have popped, the b quarks continue to move as the particles decay.

If a trigger can see only one spatial area of the event, it may pick up only one or two of the four quarks, letting a di-Higgs go unrecorded. But if the trigger could see more than that, “looking at all of them at the same time, that could be huge,” says David Miller, an associate professor of physics at the University of Chicago and a member of the ATLAS experiment.

In 2013, Miller started developing a system that would allow triggers to do just that: analyze an entire image at once. He and his colleagues called it the global feature extractor, or gFEX. After nearly a decade of development, gFEX started being integrated into ATLAS this year.
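The difference between a regional and a global view can be shown with a toy example. It does not represent how gFEX itself works. The region names and the four-jet requirement below are assumptions made for illustration.

```python
from collections import Counter


def regional_trigger(jet_regions, required=4):
    """Fire only if one single region contains `required` b-quark jets."""
    counts = Counter(jet_regions)
    return any(n >= required for n in counts.values())


def global_trigger(jet_regions, required=4):
    """Fire if the whole event, across all regions, contains `required` jets."""
    return len(jet_regions) >= required


# Hypothetical di-Higgs-like event: four b-quark jets spread over three regions.
di_higgs_like = ["north", "north", "south", "east"]

seen_regionally = regional_trigger(di_higgs_like)
seen_globally = global_trigger(di_higgs_like)
```

No single region holds more than two jets, so the regional check stays silent, while the global check counts all four.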

Making triggers better and faster

Trigger systems have traditionally had two levels. The first, or level-1, trigger might contain hundreds or even thousands of signal instructions, winnowing the saved data down to less than 1%. The second, high-level trigger contains more complex instructions and saves only about 1% of what survived the level-1 trigger. Those events that make it through both levels are recorded for physicists to analyze.
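A back-of-the-envelope calculation shows how the two levels combine. It uses the 40 million crossings per second quoted earlier and treats both fractions as exactly 1 in 100, which is a simplification of "less than 1%" and "about 1%."

```python
CROSSINGS_PER_SECOND = 40_000_000
KEEP_ONE_IN = 100  # simplifying assumption: each level keeps 1 event in 100

after_level1 = CROSSINGS_PER_SECOND // KEEP_ONE_IN   # 400,000 events per second
after_high_level = after_level1 // KEEP_ONE_IN       # 4,000 events per second
```

The result, about 4,000 events per second, is in line with the "few thousand" saved events that Hong describes for ATLAS and CMS.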

For now, at the LHC, machine learning is mostly being used in the high-level triggers. Such triggers could over time get better at identifying common processes—background events they can ignore in favor of a signal. They could also better identify specific combinations of particles, such as two electrons whose tracks are diverging at a certain angle.

“You can feed the machine learning the energies of things and the angles of things and then say, ‘Hey, can you do a better job distinguishing things we don’t want from the things we want?’” Hong says.

Future trigger systems could use machine learning to more precisely identify particles, says Jennifer Ngadiuba, an associate scientist at Fermi National Accelerator Laboratory and a member of the CMS experiment.

Current triggers are programmed to look for individual features of a particle, such as its energy. A more intelligent algorithm could learn all the features of a particle and assign a score to each particle decay—for example, a di-Higgs decaying to four b quarks. A trigger could then simply be programmed to look for that score.

“You can imagine having one machine-learning model that does only that,” Ngadiuba says. “You can maximize the acceptance of the signal and reduce a lot of the background.”
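A minimal sketch of a score-based trigger follows, assuming a logistic model with two made-up input features. The weights, bias and threshold are hypothetical; a real model would learn its parameters from simulated events and use far more inputs.

```python
import math

# Hypothetical parameters, chosen by hand for illustration only.
WEIGHTS = {"n_b_jets": 1.2, "total_energy_tev": 0.8}
BIAS = -5.0


def event_score(features):
    """Combine all features into one number between 0 and 1."""
    z = BIAS + sum(WEIGHTS[name] * features[name] for name in WEIGHTS)
    return 1.0 / (1.0 + math.exp(-z))


def score_trigger(features, threshold=0.5):
    """The trigger looks only at the score, not at individual features."""
    return event_score(features) >= threshold


signal_like = {"n_b_jets": 4, "total_energy_tev": 1.0}
background_like = {"n_b_jets": 1, "total_energy_tev": 0.5}

keep_signal = score_trigger(signal_like)
keep_background = score_trigger(background_like)
```

Raising or lowering the threshold is then the single knob that trades signal acceptance against background rejection.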

Most high-level triggers run on computer processors called central processing units or graphics processing units. CPUs and GPUs can handle complex instructions, but for most experiments, they are not efficient enough to quickly make the millions of decisions needed in a level-1 trigger.

At the ATLAS and CMS experiments, scientists use different computer chips called field-programmable gate arrays, or FPGAs. These chips are hard-wired with custom instructions and can make decisions much faster than a more complex processor. The trade-off, though, is that FPGAs have a limited amount of space, and some physicists are unsure whether they can handle more complex machine-learning algorithms. The concern is that the limits of the chips would mean reducing the number of instructions they can provide to a trigger system, potentially leaving interesting physics data unrecorded.

“It’s a new field of exploration to try to put these algorithms on these nastier architectures, where you have to really think about how much space your algorithm is using,” says Melissa Quinnan, a postdoctoral researcher at the University of California, San Diego and a member of the CMS experiment. “You have to reprogram it every time you want it to do a different calculation.”

Talking to computer chips

Many physicists don’t have the skillset needed to program FPGAs. Usually, after a physicist writes code in a computer language like Python, an electrical engineer needs to convert the code to a hardware description language, which directs switch-flipping on an FPGA. It’s time-consuming and expensive, Quinnan says. Abstract hardware languages, such as High-Level Synthesis, or HLS, can facilitate this process, but many physicists don’t know how to use them.

So in 2017, Javier Duarte, now an assistant professor of physics at UCSD and a member of the CMS collaboration, began collaborating with other researchers on a tool that directly translates computer language to FPGA code using HLS. The team first posted the tool, called hls4ml, to the software platform GitHub on October 25 that year. Hong is developing a similar platform for the ATLAS experiment. “Our goal was really lowering the barrier to entry for a lot of physicists or machine-learning people who aren’t FPGA experts or electronics experts,” Duarte says.

Quinnan, who works in Duarte’s lab, is using the tool to add to CMS a type of trigger that, rather than searching for known signals of interest, tries to identify any events that seem unusual, an approach known as anomaly detection.

“Instead of trying to come up with a new theory and looking for it and not finding it, what if we just cast out a general net and see if we find anything we don’t expect?” Quinnan says. “We can try to figure out what theories could describe what we observe, rather than trying to observe the theories.”

The trigger uses a type of machine learning called an auto-encoder. Instead of examining an entire event, an auto-encoder compresses it into a smaller version and, over time, becomes more skilled at compressing typical events. If the auto-encoder comes across an event it has difficulty compressing, it will save it, hinting to physicists that there may be something unique in the data.
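The compress-and-compare logic can be sketched without a neural network. The stand-in below is a fixed linear projection rather than a trained auto-encoder: it squeezes each three-number toy event into a single number, rebuilds it, and flags events that rebuild poorly. The event values and the factor of 2 in the threshold are invented for illustration.

```python
import math


def fit_direction(events):
    """'Train' a one-number code: the unit vector along the mean typical event."""
    dim = len(events[0])
    mean = [sum(e[i] for e in events) / len(events) for i in range(dim)]
    norm = math.sqrt(sum(m * m for m in mean))
    return [m / norm for m in mean]


def encode(event, direction):
    """Compress an event to one number."""
    return sum(x * d for x, d in zip(event, direction))


def decode(code, direction):
    """Rebuild an approximate event from its one-number code."""
    return [code * d for d in direction]


def reconstruction_error(event, direction):
    rebuilt = decode(encode(event, direction), direction)
    return math.sqrt(sum((x - r) ** 2 for x, r in zip(event, rebuilt)))


# Hypothetical "typical" events that all share roughly the same pattern.
typical = [[1.0, 2.0, 3.0], [2.0, 4.1, 5.9], [0.5, 1.0, 1.6], [3.0, 5.9, 9.1]]
direction = fit_direction(typical)
threshold = 2 * max(reconstruction_error(e, direction) for e in typical)


def anomaly_trigger(event):
    """Save the event if it is hard to compress and rebuild."""
    return reconstruction_error(event, direction) > threshold


ordinary = anomaly_trigger([2.0, 4.0, 6.0])   # follows the usual pattern
unusual = anomaly_trigger([3.0, 1.0, 0.5])    # does not
```

An event that follows the usual pattern rebuilds almost perfectly and is ignored, while one that breaks the pattern produces a large error and is saved.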

The algorithm may be deployed on CMS as early as 2024, Quinnan says, which would make it the experiment’s first machine learning-based anomaly-detection trigger.

A test run of the system on simulated data identified a potentially novel event that wouldn’t have been detected otherwise due to its low energy levels, Duarte says. Some theoretical models of new physics predict such low-energy particle sprays.

It’s possible that the trigger is just picking up on noise in the data, Duarte says. But it’s also possible the system is identifying hints of physics beyond what most triggers have been programmed to look for. “Our fear is that we’re missing out on new physics because we designed the triggers with certain ideas in mind,” Duarte says. “Maybe that bias has made us miss some new physics.”

The triggers of the future

Physicists are thinking about what their detectors will need after the LHC’s next upgrade in 2028. As the beam gets more powerful, the centers of the ATLAS and CMS detectors, right where collisions happen, will generate too much data to ever send to powerful GPUs or CPUs for analysis. Level-1 triggers, then, will largely still need to run on more efficient FPGAs, and they will need to probe how particles move at the chaotic heart of the detector.

To better reconstruct these particle tracks, physicist Mia Liu is developing neural networks that can analyze the relationships between points in an image of an event, similar to mapping relationships between people in a social network. She plans to implement this system in CMS in 2028. “That impacts our physics program at large,” says Liu, an assistant professor at Purdue University. “Now we have tracks in the hardware trigger level, and you can do a lot of online reconstruction of the particles.”

Even the most advanced trigger systems, though, are still not physicists. And without an understanding of physics, the algorithms can make decisions that conflict with reality—say, saving an event in which a particle seems to move faster than light.

“The real worry is it’s getting it right for reasons you don’t know,” Miller says. “Then when it starts getting it wrong, you don’t have the rationale.”

To address this, Miller took inspiration from a groundbreaking algorithm that predicts how proteins fold. The system, developed by Google’s DeepMind, has a built-in understanding of symmetry that prevents it from predicting shapes that aren’t possible in nature.

Miller is trying to create trigger algorithms that have a similar understanding of physics, which he calls “self-driving triggers.” A person should, ideally, be able to understand why a self-driving car decided to turn left at a stop sign. Similarly, Miller says, a self-driving trigger should make physics-based decisions that are understandable to a physicist.

“What if these algorithms could tell you what about the data made them think it was worth saving?” Miller says. “The hope is it’s not only more efficient but also more trustworthy.”